Basic/LatentNeRF

Refined/SJC

Complex/3DTopia

Fantastical/Consistent3D

Grouped/DreamFusion

Action/One-2-3-45++

Spatial/TextMesh

Imaginative/Magic3D

Abstract

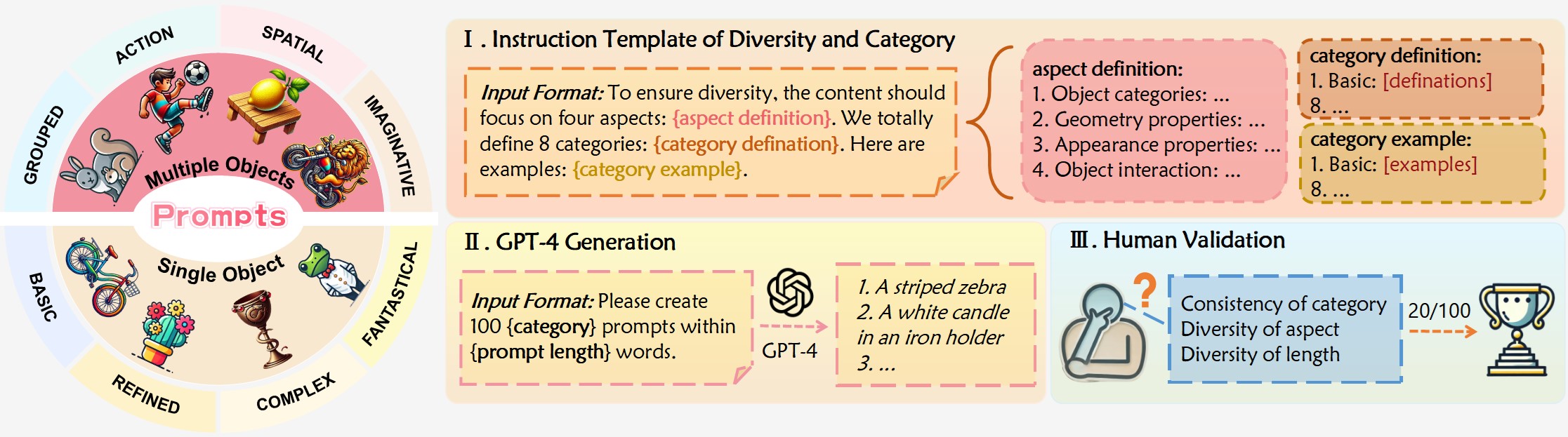

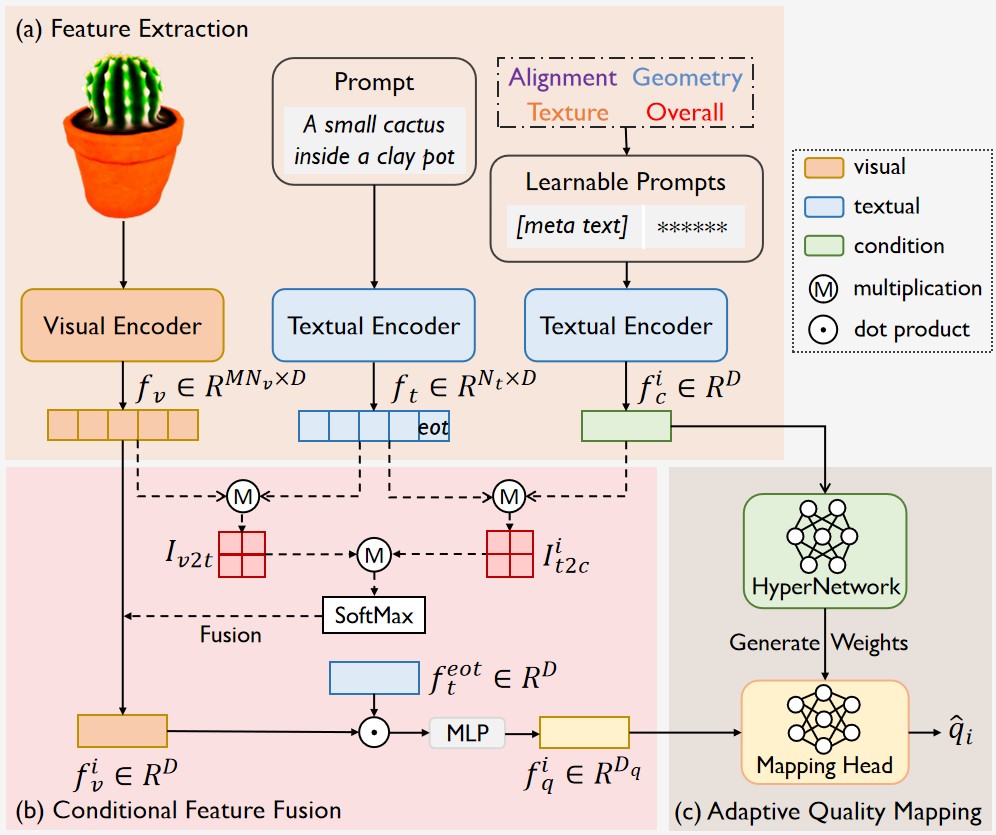

Text-to-3D generation has achieved remarkable progress in recent years, yet evaluating these methods remains challenging for two reasons: (i) existing benchmarks lack fine-grained evaluation across different prompt categories and evaluation dimensions; (ii) previous evaluation metrics focus on only a single aspect (e.g., text-3D alignment) and fail to perform multi-dimensional quality assessment. To address these problems, we first propose a comprehensive benchmark named MATE-3D. The benchmark contains eight well-designed prompt categories that cover single and multiple object generation, resulting in 1,280 generated textured meshes. We conducted a large-scale subjective experiment across four evaluation dimensions and collected 107,520 annotations, followed by detailed analyses of the results. Based on MATE-3D, we propose a novel quality evaluator named HyperScore. By using a hypernetwork to generate a specified mapping function for each evaluation dimension, our metric can effectively perform multi-dimensional quality assessment. HyperScore outperforms existing metrics on MATE-3D, making it a promising metric for assessing and improving text-to-3D generation.

Average Scores

Basic, Refined, Complex, and Fantastic are single-object prompt categories; Grouped, Action, Spatial, and Imaginative are multiple-object prompt categories.

| Method | Basic | Refined | Complex | Fantastic | Grouped | Action | Spatial | Imaginative |
|---|---|---|---|---|---|---|---|---|
| DreamFusion | 4.99 | 4.96 | 4.64 | 3.24 | 3.45 | 3.73 | 4.47 | 3.75 |
| Magic3D | 5.26 | 4.89 | 5.06 | 4.95 | 3.92 | 4.73 | 5.06 | 4.93 |
| SJC | 3.28 | 3.48 | 2.95 | 2.92 | 2.50 | 2.77 | 2.92 | 3.25 |
| TextMesh | 4.08 | 4.56 | 4.79 | 4.22 | 3.22 | 3.68 | 4.17 | 4.26 |
| 3DTopia | 5.06 | 4.91 | 4.83 | 3.89 | 4.05 | 2.80 | 3.89 | 2.95 |
| Consistent3D | 4.15 | 4.92 | 4.40 | 4.32 | 3.37 | 3.64 | 4.39 | 3.47 |
| LatentNeRF | 3.20 | 3.76 | 3.47 | 4.16 | 2.94 | 3.48 | 3.46 | 4.01 |
| One-2-3-45++ | 7.79 | 7.69 | 6.50 | 6.60 | 6.49 | 5.65 | 6.81 | 6.13 |
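To compare methods at a glance, the per-category averages above can be collapsed into a single number per method. The following is a minimal sketch that takes an unweighted mean over the eight categories and over the single-object and multiple-object halves. This aggregation is an illustrative assumption and is not a figure reported on this page; the names `SCORES`, `mean_scores`, and `ranking` are hypothetical.

```python
# Average scores copied from the table above, in column order:
# Basic, Refined, Complex, Fantastic (single object),
# Grouped, Action, Spatial, Imaginative (multiple object).
SCORES = {
    "DreamFusion":  [4.99, 4.96, 4.64, 3.24, 3.45, 3.73, 4.47, 3.75],
    "Magic3D":      [5.26, 4.89, 5.06, 4.95, 3.92, 4.73, 5.06, 4.93],
    "SJC":          [3.28, 3.48, 2.95, 2.92, 2.50, 2.77, 2.92, 3.25],
    "TextMesh":     [4.08, 4.56, 4.79, 4.22, 3.22, 3.68, 4.17, 4.26],
    "3DTopia":      [5.06, 4.91, 4.83, 3.89, 4.05, 2.80, 3.89, 2.95],
    "Consistent3D": [4.15, 4.92, 4.40, 4.32, 3.37, 3.64, 4.39, 3.47],
    "LatentNeRF":   [3.20, 3.76, 3.47, 4.16, 2.94, 3.48, 3.46, 4.01],
    "One-2-3-45++": [7.79, 7.69, 6.50, 6.60, 6.49, 5.65, 6.81, 6.13],
}


def mean(values):
    """Unweighted arithmetic mean of a non-empty list."""
    return sum(values) / len(values)


# Assumption: every category counts equally in the aggregate.
mean_scores = {method: mean(row) for method, row in SCORES.items()}
single_means = {method: mean(row[:4]) for method, row in SCORES.items()}
multiple_means = {method: mean(row[4:]) for method, row in SCORES.items()}

# Methods ordered from highest to lowest overall mean.
ranking = sorted(mean_scores, key=mean_scores.get, reverse=True)

for method in ranking:
    print(
        f"{method:13s} all={mean_scores[method]:.2f} "
        f"single={single_means[method]:.2f} multiple={multiple_means[method]:.2f}"
    )
```

Under this equal-weight assumption, the ordering depends only on the row sums, so a different weighting of categories could change the positions of methods whose means are close.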

Prompt Design

Proposed Evaluator
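
The abstract describes HyperScore as using a hypernetwork to generate a mapping function for each evaluation dimension. The snippet below is only a toy sketch of that general idea, not the authors' architecture: a small generator turns a per-dimension embedding into the weights and bias of a linear scoring head, which is then applied to a shared feature vector. The class `ToyHyperNetwork`, the sizes `FEATURE_SIZE` and `EMBED_SIZE`, and the random untrained weights are all assumptions made for illustration.

```python
import random

DIMENSIONS = ["alignment", "geometry", "texture", "overall"]
FEATURE_SIZE = 8  # hypothetical size of the shared feature vector
EMBED_SIZE = 4    # hypothetical size of each dimension embedding


def make_matrix(rows, cols, rng):
    """Return a rows x cols matrix of small random weights."""
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


class ToyHyperNetwork:
    """Maps a dimension embedding to the parameters of a linear scoring head."""

    def __init__(self, seed=0):
        rng = random.Random(seed)
        # One embedding per evaluation dimension (random here, untrained).
        self.embeddings = {
            name: [rng.uniform(-1.0, 1.0) for _ in range(EMBED_SIZE)]
            for name in DIMENSIONS
        }
        # The generator emits FEATURE_SIZE weights plus one bias term.
        self.generator = make_matrix(FEATURE_SIZE + 1, EMBED_SIZE, rng)

    def head_for(self, dimension):
        """Generate (weights, bias) of the scoring head for one dimension."""
        params = matvec(self.generator, self.embeddings[dimension])
        return params[:-1], params[-1]

    def score(self, feature, dimension):
        """Apply the generated head to a shared feature vector."""
        weights, bias = self.head_for(dimension)
        return sum(w * x for w, x in zip(weights, feature)) + bias


# A stand-in feature; a real evaluator would extract it from the prompt
# and the rendered 3D sample.
feature_rng = random.Random(1)
feature = [feature_rng.uniform(-1.0, 1.0) for _ in range(FEATURE_SIZE)]

model = ToyHyperNetwork()
scores = {name: model.score(feature, name) for name in DIMENSIONS}
for name, value in scores.items():
    print(f"{name}: {value:.3f}")
```

The point of the design is that the feature extractor is shared while the final mapping differs per dimension, so one input can receive four distinct scores without training four separate models.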

Performance Comparison

| Metric | Alignment PLCC | Alignment SRCC | Alignment KRCC | Geometry PLCC | Geometry SRCC | Geometry KRCC | Texture PLCC | Texture SRCC | Texture KRCC | Overall PLCC | Overall SRCC | Overall KRCC |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| CLIPScore | 0.535 | 0.494 | 0.347 | 0.520 | 0.496 | 0.347 | 0.563 | 0.537 | 0.379 | 0.541 | 0.510 | 0.359 |
| BLIPScore | 0.569 | 0.533 | 0.377 | 0.570 | 0.542 | 0.382 | 0.608 | 0.578 | 0.413 | 0.586 | 0.554 | 0.393 |
| Aesthetic Score | 0.185 | 0.099 | 0.066 | 0.270 | 0.160 | 0.107 | 0.288 | 0.172 | 0.114 | 0.228 | 0.150 | 0.100 |
| ImageReward | 0.675 | 0.651 | 0.470 | 0.624 | 0.591 | 0.422 | 0.650 | 0.612 | 0.441 | 0.657 | 0.623 | 0.448 |
| HPS v2 | 0.477 | 0.416 | 0.290 | 0.455 | 0.412 | 0.286 | 0.469 | 0.423 | 0.296 | 0.465 | 0.420 | 0.293 |
| CLIP-IQA | 0.151 | 0.039 | 0.025 | 0.223 | 0.125 | 0.084 | 0.264 | 0.163 | 0.108 | 0.213 | 0.115 | 0.076 |
| ResNet50 + FT | 0.577 | 0.551 | 0.387 | 0.669 | 0.653 | 0.471 | 0.691 | 0.672 | 0.487 | 0.649 | 0.632 | 0.452 |
| ViT-B + FT | 0.544 | 0.515 | 0.360 | 0.662 | 0.642 | 0.461 | 0.691 | 0.676 | 0.492 | 0.633 | 0.614 | 0.439 |
| SwinT-B + FT | 0.534 | 0.506 | 0.359 | 0.626 | 0.610 | 0.438 | 0.654 | 0.640 | 0.465 | 0.608 | 0.592 | 0.425 |
| MultiScore | 0.657 | 0.638 | 0.458 | 0.719 | 0.703 | 0.516 | 0.746 | 0.729 | 0.540 | 0.714 | 0.698 | 0.511 |
| HyperScore | 0.754 | 0.739 | 0.547 | 0.793 | 0.782 | 0.588 | 0.822 | 0.811 | 0.619 | 0.804 | 0.792 | 0.600 |
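
The three criteria in the table are standard correlation coefficients between a metric's predictions and the subjective scores: Pearson (PLCC), Spearman rank-order (SRCC), and Kendall rank-order (KRCC). The following is a minimal pure-Python sketch of how they can be computed. The sample values in `predicted` and `subjective` are made up for illustration and are not taken from MATE-3D, and the Kendall variant used here (tau-b) is an assumption, since this page does not state which variant was used.

```python
import math


def pearson(x, y):
    """Pearson linear correlation coefficient (PLCC)."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


def average_ranks(values):
    """Rank values from 1 to n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values equal to values[order[i]].
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def spearman(x, y):
    """Spearman rank-order correlation (SRCC): Pearson on the ranks."""
    return pearson(average_ranks(x), average_ranks(y))


def kendall(x, y):
    """Kendall rank-order correlation (KRCC), tau-b variant."""
    concordant = discordant = ties_x = ties_y = 0
    n = len(x)
    for i in range(n):
        for j in range(i + 1, n):
            dx, dy = x[i] - x[j], y[i] - y[j]
            if dx == 0 and dy == 0:
                continue  # pairs tied in both variables are ignored
            if dx == 0:
                ties_x += 1
            elif dy == 0:
                ties_y += 1
            elif dx * dy > 0:
                concordant += 1
            else:
                discordant += 1
    pairs = concordant + discordant
    return (concordant - discordant) / math.sqrt((pairs + ties_x) * (pairs + ties_y))


# Hypothetical metric predictions and subjective scores for five samples.
predicted = [0.2, 0.5, 0.4, 0.9, 0.7]
subjective = [2.0, 4.5, 5.0, 8.0, 6.5]

plcc = pearson(predicted, subjective)
srcc = spearman(predicted, subjective)
krcc = kendall(predicted, subjective)
print(f"PLCC={plcc:.3f} SRCC={srcc:.3f} KRCC={krcc:.3f}")
```

In this toy data only one pair of samples is ordered differently by the metric and by the subjective scores, so both rank correlations stay high. All three coefficients lie in [-1, 1], and values closer to 1 indicate better agreement with human judgments.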

Gallery

BibTeX

@article{zhang2024benchmarking,
  title={Benchmarking and Learning Multi-Dimensional Quality Evaluator for Text-to-3D Generation},
  author={Yujie Zhang and Bingyang Cui and Qi Yang and Zhu Li and Yiling Xu},
  journal={arXiv preprint arXiv:2412.11170},
  year={2024}
}